Dan Biderman

Postdoctoral Scholar at Stanford Statistics & CS

Linderman Lab, Stanford Statistics

Hazy Research (Ré) Lab, Stanford CS

I am a postdoc at Stanford, co-advised by Christopher Ré and Scott Linderman.

I build resource-efficient AI systems and apply them to neuroscience.

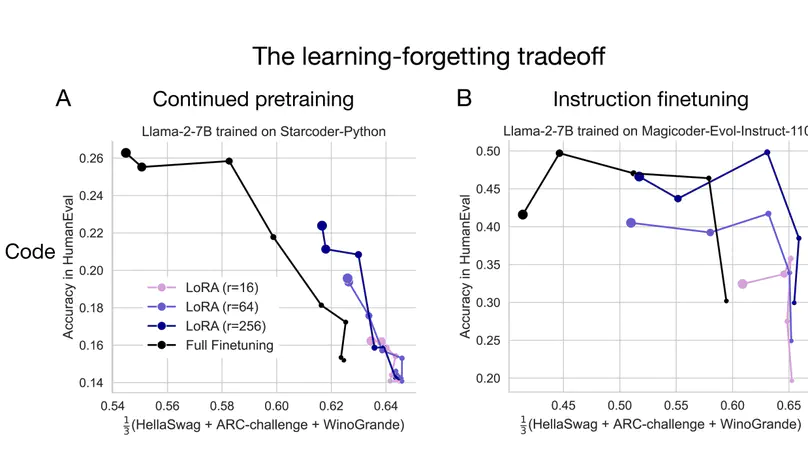

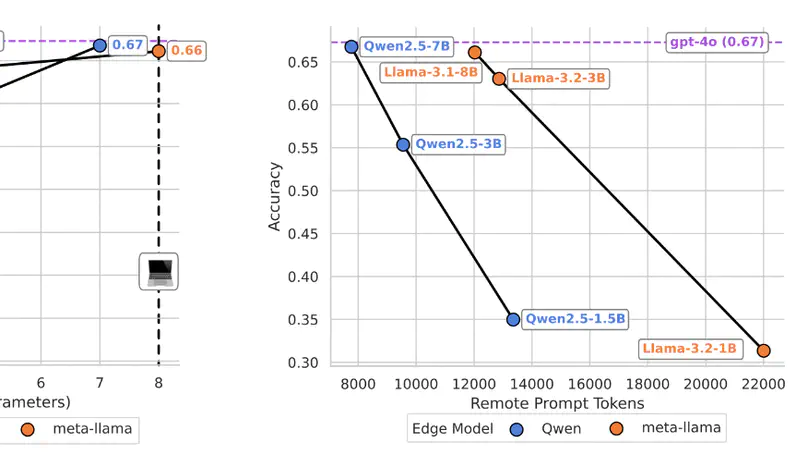

My current focus is on building language models that dynamically learn from experience. I recently shed light on learning-forgetting tradeoffs in parameter-efficient finetuning in collaboration with Databricks Mosaic AI (TMLR 2024, Featured Certification), and proposed new collaboration patterns between on-device and cloud LLMs (see the Minions project, ICML 2025).

I co-organize the workshop on Efficient Systems for Foundation Models (most recently at ICML 2025).

I completed my PhD at Columbia’s Center for Theoretical Neuroscience, where I worked with John Cunningham and Liam Paninski. In my main PhD project, I built deep learning models for tracking animal movement in videos: the Lightning Pose package (Nature Methods, 2024). I won Columbia’s Titus M Cowan dissertation prize in biomedical research, and served as the student speaker at the 2025 PhD hooding ceremony.

Here is my CV.

- Efficient LLMs and multi-agent systems.

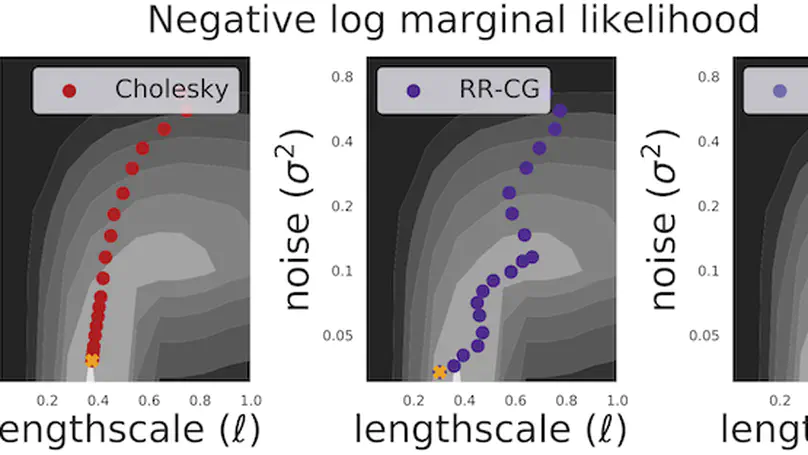

- Hardware-aware approaches to numerical linear algebra and ML.

- Modeling and analysis of biological data.

PhD in Computational Neuroscience, 2018-2024

Columbia University

MA in Cognitive Science, 2018

Tel Aviv University

The Adi Lautman Interdisciplinary Program for Outstanding Students (Cog. Sci., Math, Neurobio.), 2013-2017

Tel Aviv University

Featured Publications

Compares LoRA with full-parameter finetuning on challenging code and math tasks, shedding light on learning-forgetting tradeoffs: LoRA usually underperforms full finetuning in a new target domain, while forgetting less of the source domain.

Small on-device LMs, guided by frontier cloud-hosted LMs, solve workloads on device at a fraction of the cost.

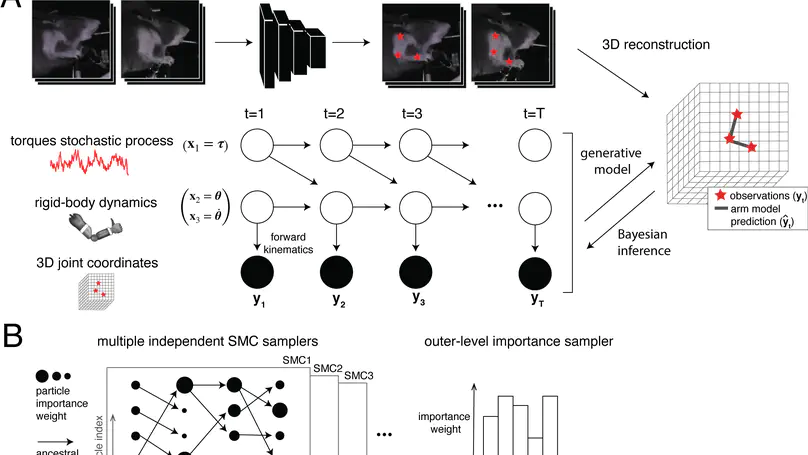

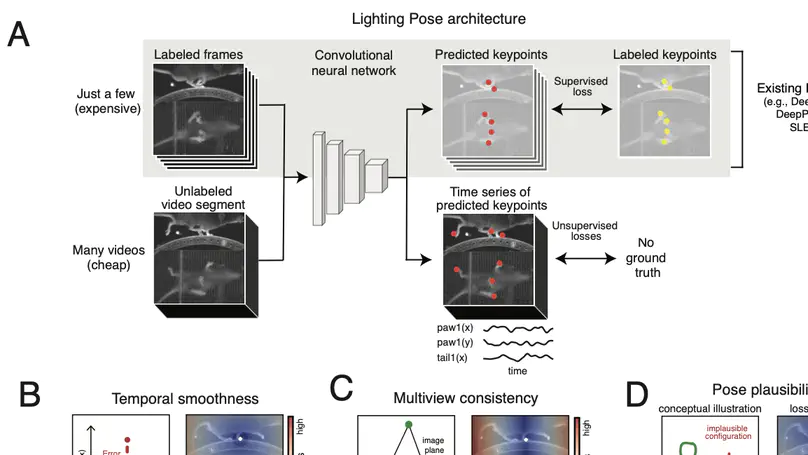

Introduces a semi-supervised approach to pose estimation, using physically informed inductive biases to improve generalization with fewer labels. Poses are further refined by combining deep ensembles with state-space models. Also open-sources a deep learning system optimized for efficiency, built on PyTorch Lightning and NVIDIA DALI.

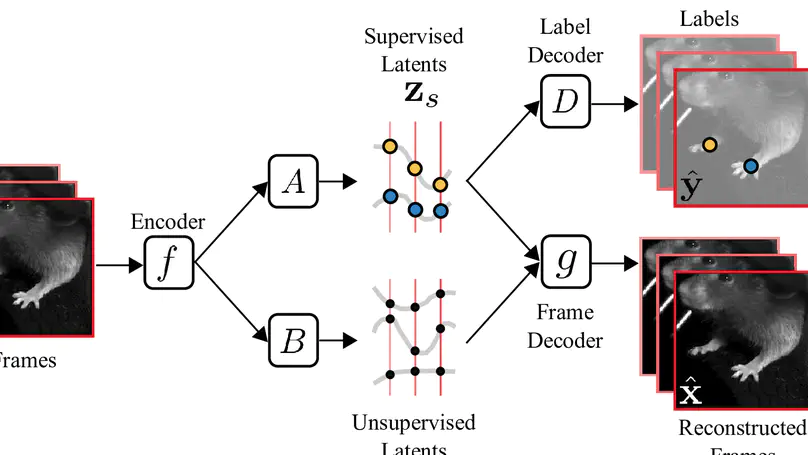

This model disentangles movement that can be quantified by keypoints (e.g., limb position) from subtler variations such as orofacial movements. We introduce a novel VAE whose latent space decomposes into two orthogonal subspaces: an unsupervised subspace and a supervised subspace that is linearly predictive of labels (keypoints). The latent space additionally includes a context variable that predicts the video/subject identity.